An understanding of cancer, its treatments and how to care for people with the condition comes from specialised, in-depth education and training. Continuing professional development (CPD) is therefore essential, especially in cancer sub-specialties, such as those for specific tumour types, where highly specialised knowledge and understanding are needed to diagnose, treat and care for people with specific cancers safely and competently. For rarer tumours such as cutaneous T-cell lymphomas (CTCL), it is necessary to provide CPD that is both at an advanced level and proportionate to the size of the workforce managing patients with these cancers.

CTCL is a rare group of complex diseases that have an annual incidence of 0.7 per 100 000 population (Gilson et al, 2019). They are challenging to diagnose, with an average time to diagnosis of 3 years (Scarisbrick, 2018), with one of the main issues being a difficulty in distinguishing CTCL from benign inflammatory disorders, both clinically and histopathologically (Miyagaki, 2021). Early diagnosis is crucial, because it reduces the use of inappropriate treatments, minimises the effects of the disease and reduces patient anxiety, but this requires specialist and advanced knowledge.

CTCL has more than 20 subtypes, and presentations can range from indolent and slow-growing disease with minimal skin involvement to aggressive variants with a very poor prognosis (National Institute for Health and Care Excellence (NICE), 2010). Care of patients with advanced skin disease can be challenging, because they can experience pain, pruritus, and treatment toxicities as well as body image and psychosocial issues and often require complex skincare management (Scarisbrick, 2022). Treatment regimens are complex and can include skin-directed therapy, retinoids, radiotherapy, cytotoxic chemotherapy, monoclonal antibodies, extracorporeal photopheresis and stem-cell transplantation. Randomised controlled trials are rare, with most guidelines being based on retrospective cohort studies and expert opinion (Willemze, 2018). As CTCL cannot be cured, treatment selection is based on not only clinical effectiveness but also reducing symptoms of the disease and improving quality of life (Sokołowska-Wojdyło, 2015).

Management of CTCL at a local level can be fragmented, with care provided by dermatologists, oncologists or haematologists, depending on the services available. In addition, highly specialist centres and shared-care centres are geographically separated and both are often formed of small teams. As clinicians may see only a few patients with CTCL in their practice, some lack confidence and expertise in managing them. Given that CTCL is a rare disease and effective treatments have yet to be established, it is critical that patients have streamlined pathways that allow rapid access to centres with experience of and expertise in treating the disease (Gilson et al, 2019). Such pathways provide coherent guidance as to which patients require referral to one of the seven supra-regional centres nationally, where they will have access to expert dermatologists, oncologists, histopathologists and specialist nursing teams (NICE, 2010).

The team in the authors' local supra-regional referral centre recognised the need for high-quality, specialist education to support colleagues in other care settings to overcome problems in treating and caring for this patient group. However, they were also aware of difficulties in delivering highly specialist education about rare cancers, including reaching and engaging with clinical staff not working in the specialist field and obtaining funding for educational initiatives (Joint Action on Rare Cancers, 2014).

One method of providing highly specialised CPD is through topic-specific education meetings, webinars, seminars and masterclasses. Agreement was reached to deliver a 2-hour specialist education webinar, but this needed to be evaluated for both funding purposes and quality assurance. Unlike longer programmes or courses of learning, there are no well-established or validated evaluation measures for one-off education events. The authors therefore applied a conceptual model of evaluation for continuous medical education to both comprehensively evaluate the CTCL webinar and test the validity of using such a measure to evaluate one-off CPD events.

Methodology and method

The evaluation model

CPD activities in which evaluation outcomes are planned before the educational programme is developed can be more effective in achieving desired outcomes than those in which the education programme is planned first.

The conceptual model of Moore et al's (2009) expanded outcomes framework was used to plan the evaluation outcomes for the CTCL webinar. Moore et al (2009) proposed that their conceptual model should be used to guide decision-making about CPD, rather than prescribe evaluation of every CPD activity. Therefore, there is some flexibility in the application of this conceptual framework when evaluating CPD activities.

Because of limitations in time and funding, the authors developed a protocol for evaluating the webinar using level 1 to level 5 outcomes of Moore et al's model (Table 1). Data about or from patients to evaluate level 6 and level 7 outcomes using this model were not collected.

Table 1. Evaluation of cutaneous T-cell lymphoma webinar using Moore et al's (2009) model level 1–5 outcomes

| Level | Outcome | Plan to achieve outcome | Method of evaluation |
|---|---|---|---|
| 1 | Learner participation | | Registration records of learners; polling questions at the start of the webinar about learners' demographic details |
| 2 | Learner satisfaction | | Responses to webinar evaluation in questionnaire 1 (immediately after the webinar) |
| 3a | Declarative knowledge | Polling questions based on (declarative) knowledge covered in presentations about early diagnosis, referral pathways and criteria, diagnostics, treatment and clinical trials | Responses to polling questions at the start and end of the webinar. Formative feedback provided based on responses to polling questions and panel discussion to illustrate key learning outcomes. Questions in questionnaire 1 |
| 3b | Procedural knowledge | Case study-based discussion to evaluate learners' level of confidence in clinical decision-making (procedural knowledge). Questions were posed before and after presentations, case studies and during panel discussion on the case studies | |
| 4 | Self-report of competence | | Responses to polling questions at the start and end of the webinar; responses to webinar evaluation questionnaire 1 immediately after the webinar and questionnaire 2 completed 6–8 weeks later |
| 5 | Self-report of performance | | Responses to questionnaire 2 completed 6–8 weeks after the webinar |

Outcome levels (first and second columns) were used to plan how the learning outcomes would be achieved (third column) and evaluated (fourth column)

Data collection

The question criteria and formatting for the polling questions posed to learners at the start and end of the webinar were developed jointly by the academy and clinical team. These included questions about learners' roles, place of work and experience of working with people with CTCL, as well as learners' confidence about the diagnosis, treatment and care of a patient with the condition.

The project lead developed questionnaire 1 (completed by learners immediately after the webinar) and questionnaire 2 (completed 6–8 weeks later). The questionnaires were peer reviewed by the clinical team and the funder and recommended edits were made. Questionnaire 1 collected data about learner satisfaction with the quality of the webinar and learners' knowledge and confidence about the diagnosis, treatment and care of a person with CTCL. Questionnaire 2 collected data about learners' knowledge and confidence about diagnosis, treatment and care of a person with CTCL and implementing what they had learnt in practice. The data collection protocol was approved by the academy's quality assurance and standards committee.

At the start of the webinar, learners were invited to submit anonymous responses to the pre-webinar polling questions. It was explained to them that the anonymous responses would be used to evaluate their learning overall and the quality of the webinar. Case studies were presented during the webinar to facilitate engagement and discussion between the learners and the speaker panel. At the end of the webinar, learners were again invited to submit anonymous responses to the post-webinar questions, so that any changes in responses before and after the webinar could be identified as part of the evaluation.

At the end of the webinar, learners were informed that they would be sent an email containing a link to an online questionnaire. It was explained that the purpose of this questionnaire was to evaluate the quality of the webinar and the learners' perception of their knowledge about CTCL immediately after attending it. Learners were informed that completion of questionnaire 1 was voluntary and responses would be anonymous. Questionnaire 1 included a request for consent to be sent the second questionnaire 6 weeks after the date of the webinar. The learners who agreed to be emailed questionnaire 2 were asked to provide their email address.

Identifiable information was removed from the spreadsheet created from the learners' responses to questionnaire 1 by the education administrator, who was not involved in data analysis and reporting. Six weeks after the webinar, the education administrator emailed the learners who had consented to be sent questionnaire 2 with a link to the online questionnaire and information about completing it. A second spreadsheet for data analysis was populated with the anonymous responses to questionnaire 2.

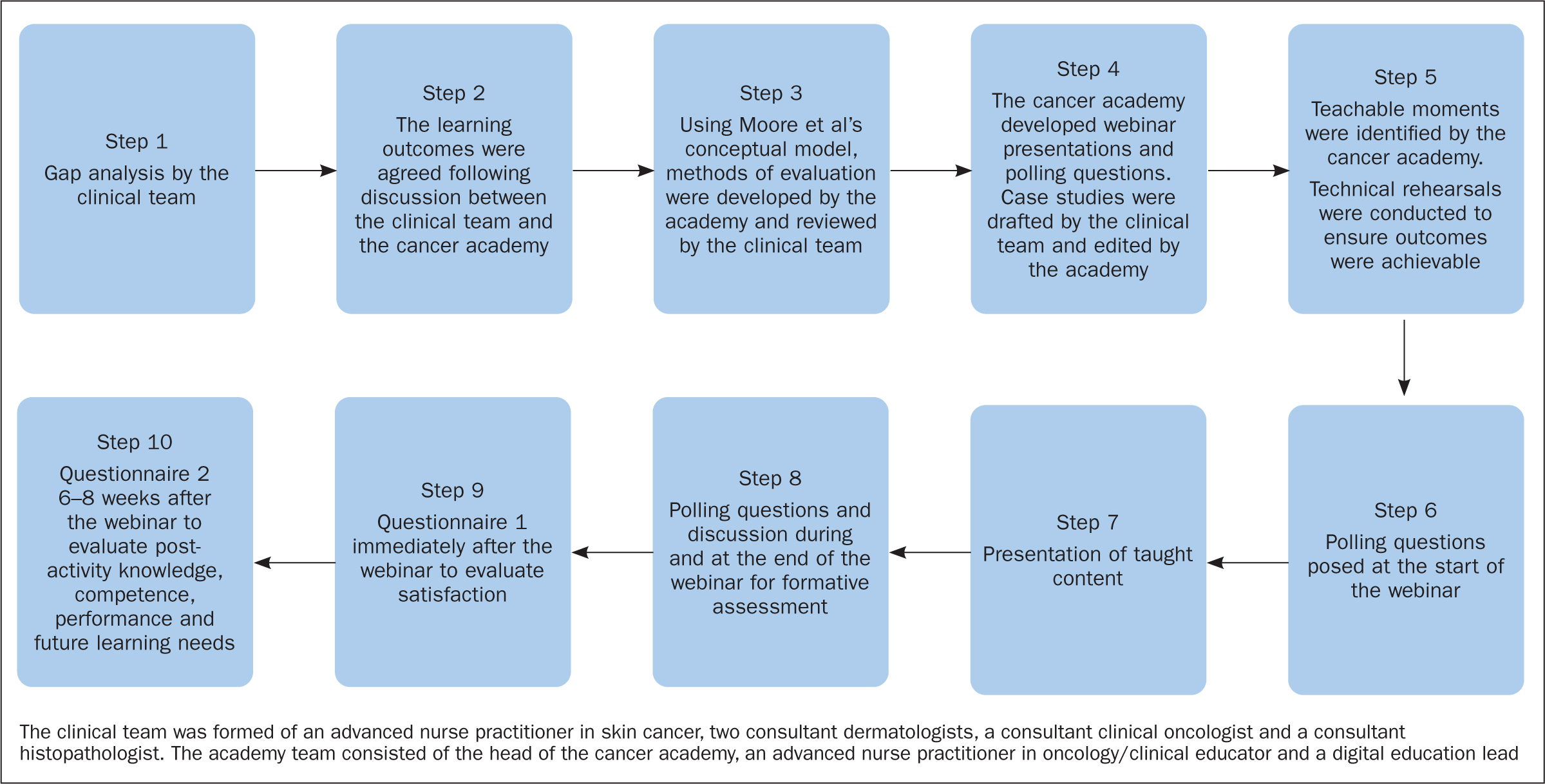

Planning the webinar and evaluation

A core aspect of Moore et al's model is assessment. PRIME's approach to needs assessment, educational activity development and outcomes assessment (Greene and Prostko, 2010) (Figure 1) was used to apply levels 1–5 of Moore et al's conceptual model. The needs assessment was conducted in step 1 and confirmed in step 2; formative assessment was provided during the webinar (steps 3–8); and summative assessment comprised self-reported assessments of step 9 (satisfaction) and step 10 (performance).

Once the learning outcomes, method of evaluation, webinar programme and polling questions had been agreed, the teaching team developed items for questionnaires 1 and 2, which were reviewed by the clinical team. The questionnaires were formatted using an online questionnaire tool (Typeform) and shared with learners using the email address that they had consented to be used for this purpose.

Since the evaluation was a requirement of the funding, the questionnaires were also shared with the funder for review before their use. Raw questionnaire responses were stored in accordance with the General Data Protection Regulation (GDPR) and viewed only by the academy team.

Analysis of findings

Descriptive statistics (proportional data and averages) were used to analyse and summarise the quantitative data from questionnaire 1 and learner demographics. Comparative data analysis was conducted to identify differences between responses to the pre- and post-webinar polling questions, and between responses to questionnaire 1 (t=0) and questionnaire 2 (t=6–8 weeks after the webinar). Free-text responses made at the end of the webinar and provided in response to questionnaires 1 and 2 were summarised using content analysis. Because of the small number of respondents at the second and third time points, there were insufficient data to conduct inferential analysis to test for significant differences in levels of confidence.

Results

Level 1 outcome: learner participation

A total of 201 people registered for the webinar, of whom 104 attended. The majority of those who provided demographic information were dermatologists (23%), nurses (clinical nurse specialists, oncology nurses and dermatology nurses; 23%) and oncologists (17%). Other respondents included pathologists, GPs and allied health professionals.

Almost half (45%) of respondents worked in a dermatology unit, 42% in a cancer centre and others in a cancer unit or primary care. More than one in three (38%) respondents had worked for over 5 years in their current role, 25% for 3–5 years and 38% for fewer than 3 years. The majority (83%) of respondents had cared for or treated someone with CTCL.

When asked about what treatments were available for people with CTCL in their place of work, 76% reported phototherapy, 70% systemic anti-cancer therapy, 58% localised superficial radiotherapy, 47% extracorporeal photopheresis, 44% stem cell transplant and 26% total-skin electron beam therapy.

Level 2 outcome: learner satisfaction

Thirty-one out of 104 learners responded to questionnaire 1 immediately following the webinar. The majority of learners agreed or strongly agreed the webinar was an effective way to learn, enjoyable, relevant to their role and interesting (Table 2). Content analysis of 50 free-text comments identified that, in order of prevalence, respondents wrote: ‘thank you’, ‘very informative’, ‘excellent’, ‘really useful’, ‘great speakers’ and ‘great session’.

Table 2. Learners' satisfaction with the webinar (n=31)

| Statement | Strongly disagree | Disagree | Neither agree nor disagree | Agree | Strongly agree |
|---|---|---|---|---|---|
| The webinar was an effective way to learn | 0 | 0 | 1 | 17 | 13 |
| The webinar was enjoyable | 0 | 0 | 4 | 19 | 8 |
| The webinar was relevant to my role | 0 | 1 | 5 | 18 | 7 |
| The webinar was interesting | 0 | 0 | 3 | 16 | 12 |
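
The descriptive summary of Table 2 can be reproduced with a few lines of arithmetic. The following is a minimal sketch, not the authors' analysis script: the counts are copied from Table 2, while the dictionary keys and the function name `percent_agreeing` are illustrative assumptions.

```python
# Counts copied from Table 2 (n=31). Order of each tuple:
# strongly disagree, disagree, neither agree nor disagree, agree, strongly agree.
# The short item labels are hypothetical abbreviations of the statements.
TABLE_2 = {
    "effective way to learn": (0, 0, 1, 17, 13),
    "enjoyable": (0, 0, 4, 19, 8),
    "relevant to my role": (0, 1, 5, 18, 7),
    "interesting": (0, 0, 3, 16, 12),
}


def percent_agreeing(counts):
    """Percentage of respondents who chose 'agree' or 'strongly agree'."""
    total = sum(counts)
    return round(100 * (counts[3] + counts[4]) / total, 1)


summary = {item: percent_agreeing(counts) for item, counts in TABLE_2.items()}
for item, pct in summary.items():
    print(f"{item}: {pct}% agreed or strongly agreed")
```

Reporting the combined proportion alongside the raw counts keeps the summary transparent when the number of respondents is small.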

Level 3 outcome: declarative and procedural knowledge

Case studies were used to illustrate key principles and enable procedural knowledge to be developed. The majority of learners agreed or strongly agreed that the webinar increased their awareness of CTCL, understanding and knowledge of CTCL referral and knowledge of treating the condition in responses to both questionnaires 1 and 2 (Table 3). A pattern was seen in responses to all questions that fewer learners strongly agreed with these statements 6–8 weeks after the webinar compared with immediately afterwards.

Table 3. Learners' perceptions of their learning from the webinar (% respondents*)

| Statement | Strongly disagree (Q1) | Strongly disagree (Q2) | Disagree (Q1) | Disagree (Q2) | Neither agree nor disagree (Q1) | Neither agree nor disagree (Q2) | Agree (Q1) | Agree (Q2) | Strongly agree (Q1) | Strongly agree (Q2) |
|---|---|---|---|---|---|---|---|---|---|---|
| The webinar increased my awareness of CTCL | 0 | 0 | 0 | 0 | 13 | 31 | 55 | 54 | 32 | 15 |
| The webinar increased my understanding of how to recognise suspected CTCL | 0 | 0 | 0 | 0 | 13 | 15 | 58 | 69 | 29 | 16 |
| The webinar increased my knowledge of referral pathways and referral criteria | 0 | 0 | 0 | 0 | 19 | 23 | 52 | 62 | 29 | 15 |
| The webinar increased my knowledge of treatment of CTCL | 0 | 0 | 0 | 0 | 10 | 8 | 55 | 69 | 35 | 23 |

CTCL: cutaneous T-cell lymphoma; Q1: questionnaire 1; Q2: questionnaire 2
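
The pattern described for Table 3 can be checked by subtracting the questionnaire 1 percentages from the questionnaire 2 percentages. The sketch below is illustrative only: the percentages are copied from Table 3, and the item labels and the function `change_between_questionnaires` are assumptions introduced here. Because the two questionnaires had different numbers of respondents (31 and 13), the differences are descriptive and say nothing about statistical significance.

```python
# Percentages copied from Table 3. Each tuple holds
# (neither agree nor disagree, agree, strongly agree); both "disagree"
# categories were 0 for every item, so they are omitted.
# The short item labels are hypothetical abbreviations of the statements.
TABLE_3 = {
    "awareness of CTCL": {"Q1": (13, 55, 32), "Q2": (31, 54, 15)},
    "recognising suspected CTCL": {"Q1": (13, 58, 29), "Q2": (15, 69, 16)},
    "referral pathways and criteria": {"Q1": (19, 52, 29), "Q2": (23, 62, 15)},
    "treatment of CTCL": {"Q1": (10, 55, 35), "Q2": (8, 69, 23)},
}


def change_between_questionnaires(row):
    """Return Q2 minus Q1, in percentage points, for two summary measures."""
    _, agree_q1, strong_q1 = row["Q1"]
    _, agree_q2, strong_q2 = row["Q2"]
    return {
        "strongly_agree": strong_q2 - strong_q1,
        "agree_or_strongly_agree": (agree_q2 + strong_q2) - (agree_q1 + strong_q1),
    }


changes = {item: change_between_questionnaires(row) for item, row in TABLE_3.items()}
for item, delta in changes.items():
    print(item, delta)
```

On these figures, the proportion who strongly agreed fell by 12–17 percentage points for every statement, whereas combined agreement (agree or strongly agree) changed by 4 points or fewer for three of the four statements, the exception being awareness of CTCL.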

Level 4 outcome: self-report of competence

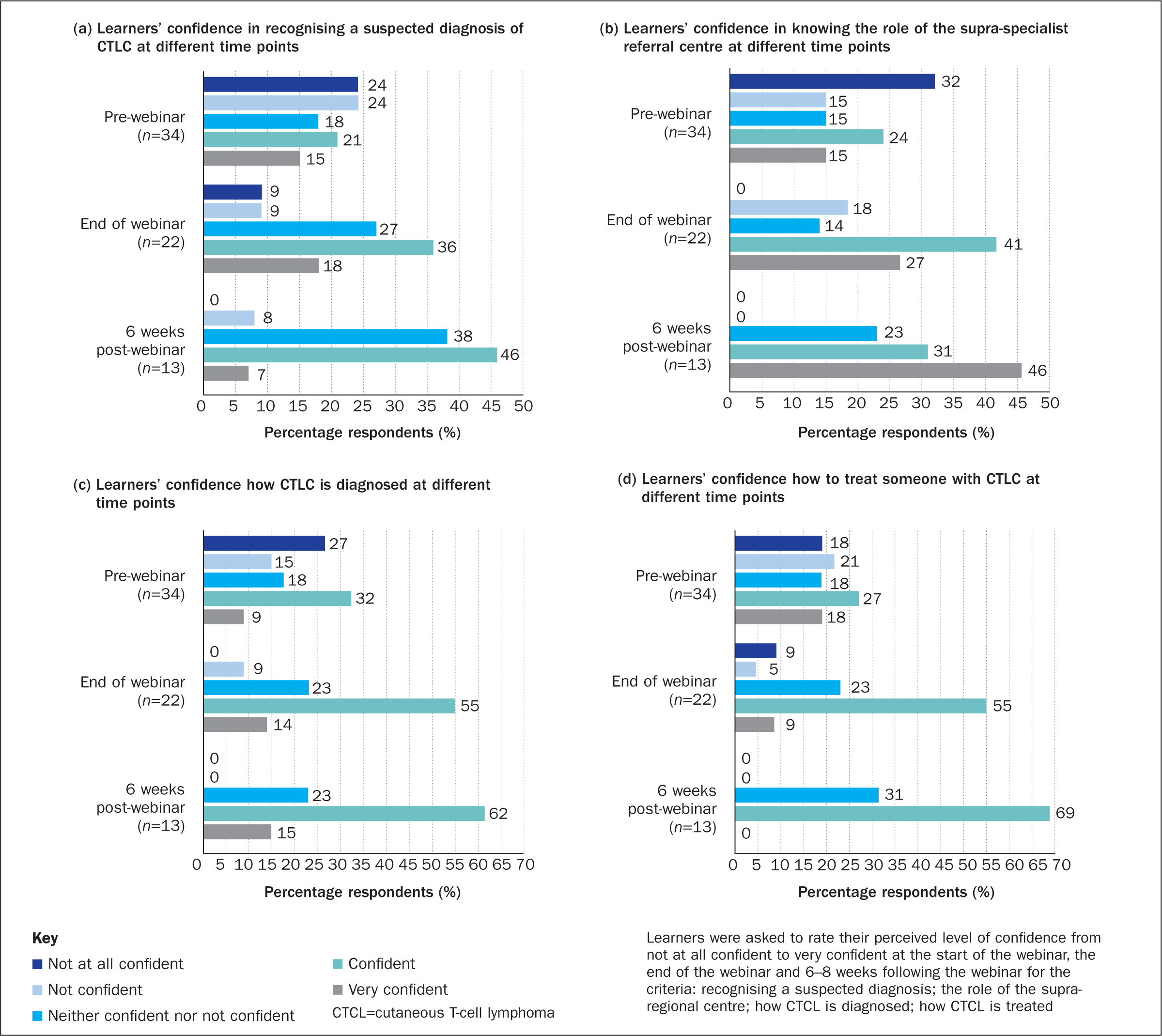

Fifty learners answered demographic questions before the webinar and 22 afterwards; 34 learners responded to pre-webinar polling questions about levels of confidence, 22 answered these questions after the webinar and 13 responded to questionnaire 2 about their level of confidence. Figure 2 shows that the proportion of respondents who felt confident was higher 6–8 weeks after the webinar than immediately afterwards, but fewer respondents felt very confident. One possible explanation is that respondents who initially reported feeling ‘very confident’ found, on reflection, that their confidence had reduced to ‘confident’.

Level 5 outcome: self-report of performance

Of the 13 respondents to questionnaire 2, nine agreed or strongly agreed that they had implemented what they had learnt in practice, and perceived that their learning had improved patient experiences and outcomes. Four respondents gave a neutral response (neither agreed nor disagreed) to both of these questions.

Learners were asked both at the end of the webinar and in questionnaires 1 and 2 about other aspects of CTCL education they would like to receive. In response to questionnaire 1, they said they would like ‘further discussion on staging and treatment options’ (n=1) and ‘more pathology-based talks’ (n=1). No responses were received for this question at the end of the webinar or in questionnaire 2.

Discussion

Arguably, webinars are a means of providing accessible, specialist education that is proportionate to the demand for such education; however, the authors questioned the effectiveness of delivering education on a complex, specialist subject via a webinar. They therefore conducted a comprehensive evaluation of a specialist 2-hour webinar on CTCL.

Their experience as supra-regional referral centre practitioners is that clinicians in other areas of practice can lack knowledge, skills and confidence in managing patients with CTCL. These gaps might account for the high number of referrals received by the supra-regional referral centre that do not warrant referral and, perhaps, a reluctance to provide shared care or manage patients outside the supra-regional referral centre. The results of the webinar evaluation showed that, although many learners already worked in a dermatology unit (45%), more than one-third had worked in their current role for over 5 years (38%) and most had experience of caring for patients with CTCL (83%), there were gaps in their knowledge and scope to improve their knowledge of clinical care and referral pathways for these patients.

Online education, such as webinars, requires innovative approaches to overcome barriers to interactive learning that are created by the digital mode of delivery. For example, it is not possible to read non-verbal cues and have an awareness of whether learners are engaged in the learning activity if delivery is didactic. In addition, demonstrating competence is difficult to achieve in webinars, but case study-based discussion can be an effective method of ascertaining learners' competence in clinical decision-making.

The evaluation showed a high level of learner satisfaction. In addition, the learners reported an increase in their (declarative and procedural) knowledge about caring for patients with CTCL. Sometimes, educational webinars may improve knowledge temporarily, but this may not always translate into clinical practice. The authors therefore evaluated competence and performance and found that most of the respondents answering questionnaire 2 felt that they had been able to implement what they had learnt into practice and, more importantly, perceived that their learning had improved patients' outcomes and experience. The learners identified some specific future learning needs, which warrant the development and delivery of further education about CTCL. The findings of this evaluation indicate that a webinar is a satisfactory and effective way of delivering education on rarer cancers and, more importantly, learners perceive that their new knowledge can be embedded into clinical practice and positively affect patient outcomes.

There is scope to develop meaningful evaluation of education webinars so that key data are captured and reported. It is important to gather longitudinal data following the education, eg 6–12 weeks after the event; however, the method used needs to account for the time lapse between the education event and the data collection point. For example, asking learners to rate how well they perceive they have been able to apply learning to their practice on a scale of 0–10, where 0 is not at all and 10 is completely, may be a more proportionate metric to use after a one-off webinar. Another way of identifying learning several weeks after education delivery would be to ask learners to name the single main thing they had learnt.

Limitations

There were limitations with the approach used here to evaluate the webinar and the data collected. Because of the nature of the webinar, it was not possible to evaluate knowledge gained by conducting summative assessment (Oermann and Gaberson, 2019). Instead, self-reported perceptions of knowledge and confidence were collected from learners and formative assessment occurred during discussion of case studies in the webinar. Using self-reported responses about perceived competence and performance is common; however, self-reported outcomes have been found to correlate only moderately with the outcomes when independently observed (Dunning et al, 2004).

Furthermore, Allen et al (2021) argued that measuring outcomes of interventions has less rigour than conducting a programme evaluation that investigates what contributed to these outcomes. Programme evaluation has merit, but is challenging to achieve when education is delivered via a webinar, although case study-based discussion that requires learners to engage in conversation about clinical decision-making and explore their own thought processes is useful.

For granularity, the team structured survey questions so that learners rated their responses using Likert scale/ranked responses (Vogt et al, 2014), eg from very confident to not at all confident. This was insightful but, because the number of respondents to both questionnaires was small, it was not possible to conduct any meaningful subset analysis of these ranked responses. Collection of larger data sets can be onerous, so software that automatically collates and summarises the data is recommended.

The main limitation was attrition of respondents with each subsequent stage of the evaluation, which resulted in few responses to questionnaire 2. With small data sets, there is a risk of type II errors (Columb and Atkinson, 2015), so the authors took care not to read more into the results than the descriptive summary of the findings allowed and did not conduct inferential statistical analysis for this reason. Attrition and missing data from respondents not answering all of the questions meant that percentages had to be used to compare data sets; raw frequency (n) is reported to provide transparency about the data sets being compared.
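
The extent of attrition can be made explicit by expressing the number of respondents at each stage as a proportion of attendees. This is a minimal sketch using the counts reported in the Results; the choice of the 104 attendees as the denominator, and the names `STAGES` and `response_rates`, are assumptions made for illustration rather than part of the authors' protocol.

```python
# Counts reported in the Results section.
REGISTERED = 201
ATTENDED = 104
STAGES = [
    ("pre-webinar confidence poll", 34),
    ("post-webinar confidence poll", 22),
    ("questionnaire 1", 31),
    ("questionnaire 2", 13),
]


def response_rates(stages, denominator):
    """Respondents at each stage as a percentage of the chosen denominator."""
    return {name: round(100 * n / denominator, 1) for name, n in stages}


# Assumption: attendees (not registrants) are the relevant denominator.
attendance_rate = round(100 * ATTENDED / REGISTERED, 1)
rates = response_rates(STAGES, ATTENDED)

print(f"attended: {attendance_rate}% of those registered")
for name, pct in rates.items():
    print(f"{name}: {pct}% of attendees")
```

Presenting response rates in this way alongside raw frequencies helps readers judge how far the later findings can be generalised to all attendees.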

Conclusion

This evaluation demonstrated that a webinar was effective in providing highly specialised education on CTCL. The authors argue that one-off education events should be evaluated but the evaluation must enable quality assurance.

For learners to develop and demonstrate competence, the inclusion of case studies with focused discussion between learners and educators is recommended.

Declarative and procedural knowledge gain was identified by using polling questions. Evidencing competence and performance in practice from education webinars is challenging, particularly in the longer term; however, learners can reasonably be asked to rate how well they have been able to apply their learning in practice and identify their main learning outcome to verify a change in performance.

KEY POINTS

- The diagnosis, treatment, management and care of patients with cutaneous T-cell lymphoma require specialist and advanced knowledge.

- Providing specialist education to colleagues working in shared-care centres is difficult for highly specialist centres to do, as they are geographically separated and both are often formed of small teams.

- Effective, highly specialist education can be provided through online learning, including educational webinars.

- All education and training requires evaluation for quality assurance, but care is needed to ensure the evaluation is proportionate and meaningful.

- Models of evaluation are helpful for both planning education events and developing methods of evaluation.

CPD reflective questions

- Do you provide online education and training? If so, how does that vary from delivering face-to-face education and training?

- How do you ensure the quality of the education and training you provide?

- Do you use a model of evaluation? If so, what are its limitations? If not, would you consider using a model of evaluation and why?